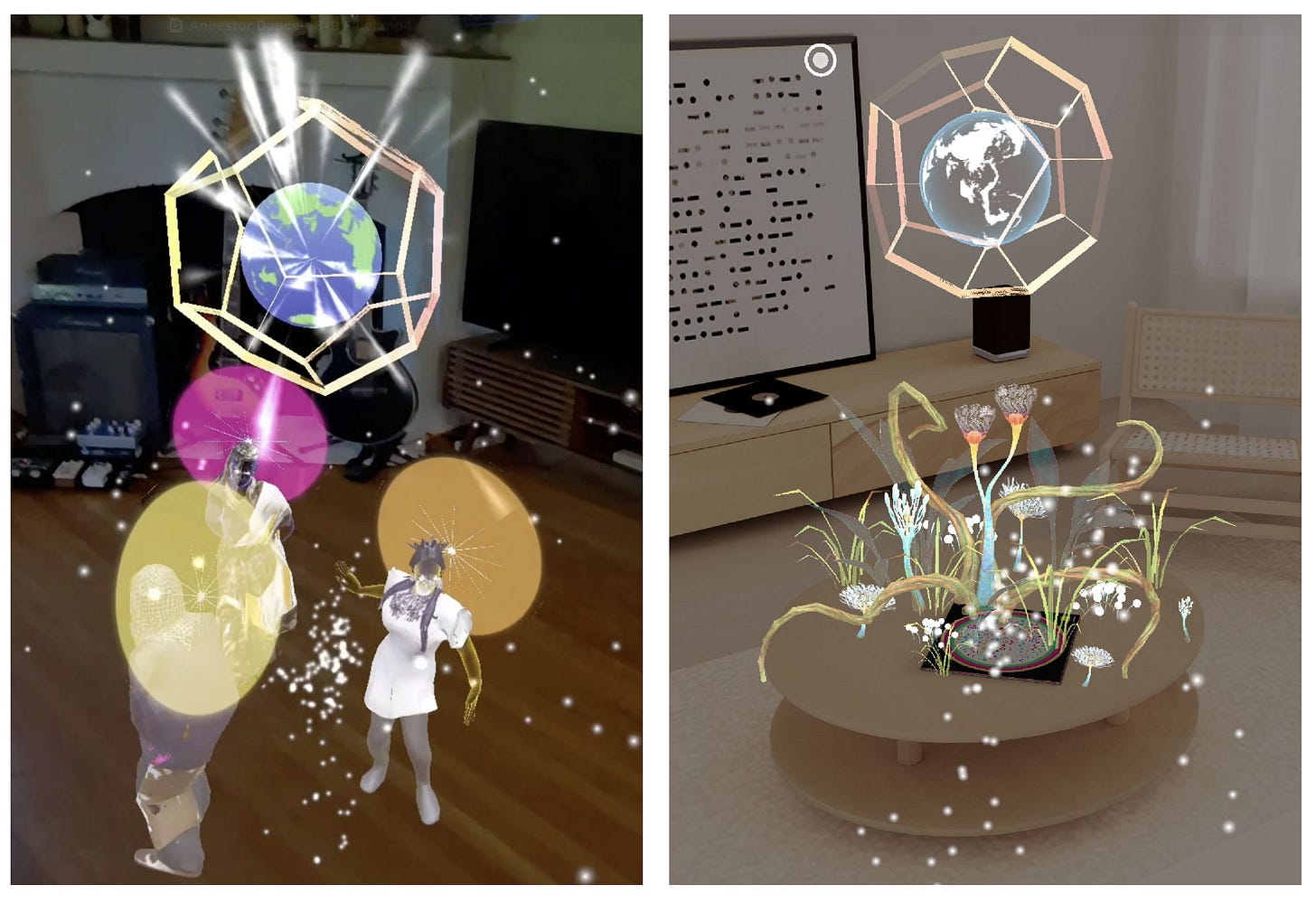

Wage Love: Ancestor Dance is a shared augmented reality ritual created for Snap Spectacles that invites participants into a ceremonial space where the past, present, and future converge. Inspired by ancestral wisdom and the urgent need to heal Mother Earth, the experience asks users to reach back—calling forth the spirits of those who came before to move, remember, and transform together.

The piece was developed during the MIT + Snap Spectacles Community Hackathon at AWE 2025. Our team was composed of Lafiya Watson, Dulce Baerga, and me!

👉 Try it now on Spectacles Gallery

We won!

In June, we entered the piece into the Snap Spectacles Community Challenge, and we're thrilled to share that it won 3rd place. I'm always so inspired by the amazing Spectacles community. It's full of incredibly talented people who are constantly pushing the boundaries of what's possible.

Inspiration

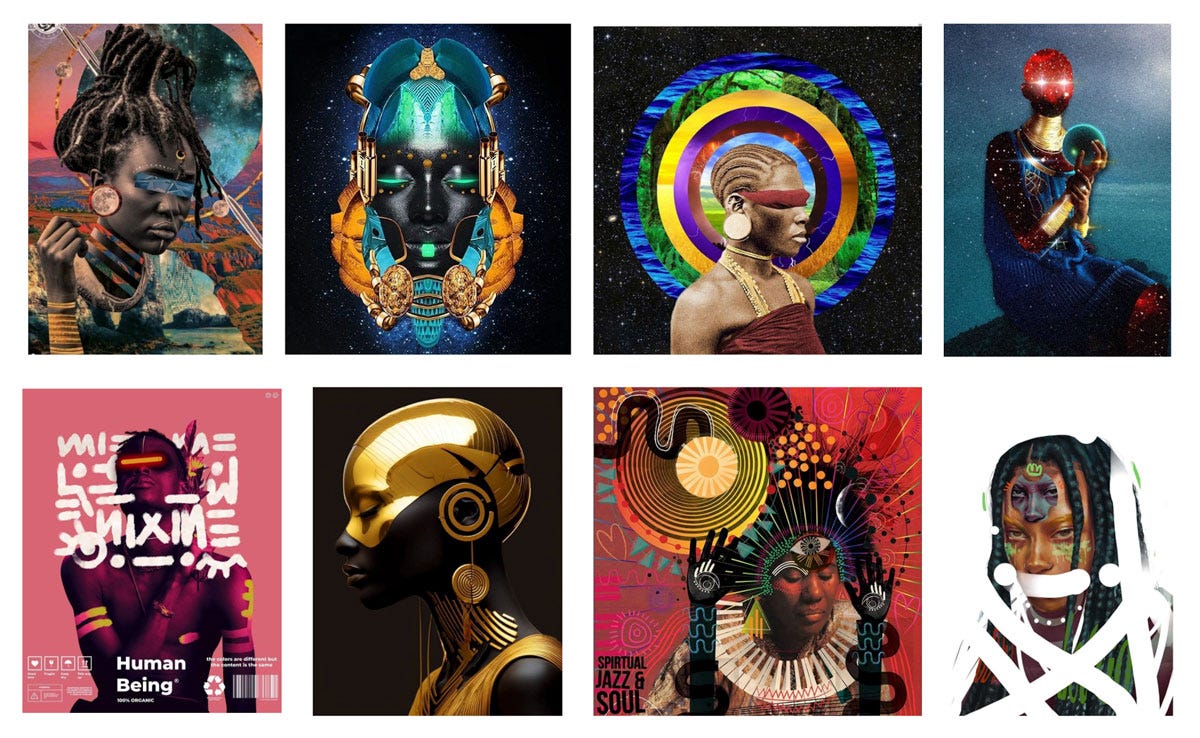

Sultan Sharrief's ongoing Wage Love project and his exploration of personal lineage through DNA research inspired the whole piece. The ancestor figures are based on real individuals from Sultan's ancestry. The team used a series of AI tools to generate visual interpretations of those ancestors, then transformed the portraits into stylized 3D models that were brought to life using motion capture data.

The Flow: How it Works

Participants summon three ancestral beings (in the form of fireflies) by speaking the word "Rise". These luminous spirits appear, dance, and offer gifts of ancestral wisdom. Once collected, these gifts orbit the user's wrists, representing the strength and guidance of their lineage. As the experience deepens, users place their hands on the Earth, triggering a ritual of connection. The ancestors fuse into a radiant, future-facing being, and participants leave trails of flowers behind them as they move, symbolizing a shared act of healing and remembrance.

Tech + Tools

Platform: Snap Spectacles + Lens Studio

Multiplayer: Built using Snap’s Connected Lenses, Interaction Kit, and Sync Kit

Voice Input: Triggered with ASR (Automatic Speech Recognition)

Gesture Recognition: Built with 3D hand tracking

3D Modeling & Rigging: Blender, Mixamo

2D & VFX Assets: Adobe After Effects, Illustrator, Photoshop

Sound Design: Reaper

AI tools were used to generate portrait references and speed up creation of the 3D character base meshes

Optional captions enhance accessibility and can be toggled on/off

Process

We started by developing a storyboard to map out the narrative arc, user flow, and core interactions—establishing how participants would move through the ritual space, summon ancestors, and contribute to the final transformation.

To ground the experience visually and emotionally, we created art direction documents that defined the look, feel, color palette, and material inspiration—blending ancestral textures with Afrofuturist and speculative design references.

From there, we built a prototype to establish the core mechanics: voice activation, gesture recognition, and basic choreography. Once the prototype was functional, we integrated final 3D assets, materials, VFX, and audio to bring the space to life—layering emotion, memory, and movement into a cohesive, immersive ritual.

After AWE, we took some additional time to polish the experience. We added fail-safe interactions (in case the environment is too loud for speech recognition), surface tracking, optional captions, and a number of other visual and technical tweaks.

Lessons from AWE

Debuting the prototype at AWE and then refining it taught us a few things:

Loud spaces bring challenges: voiceover and speech recognition interactions don't work well in crowded, noisy spaces.

Backup interactions (like tap selection or hand gestures) are essential

Optional captions are important, especially for accessibility and exhibition environments

Always build for failure: graceful degradation makes or breaks live immersive work

Don’t skip the polish phase—finishing touches make the magic
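To make the "build for failure" lesson concrete, here is a minimal sketch of how a voice trigger and its fallbacks might feed the same ritual flow. It is not the project's actual code and does not use the Lens Studio ASR or hand-tracking APIs; it assumes those systems would call the hypothetical hooks `onTranscript` (with a recognized phrase) and `onFallback` (on a tap or gesture). The class `RitualFlow` and the stage names are illustrative only. The key design choice is that every input source routes through one `summon` method, so the experience advances the same way whether or not speech recognition hears "Rise".

```typescript
// Hypothetical sketch only: not the Lens Studio API and not the project's code.
// "voice" stands in for an ASR result; "tap" and "gesture" are fallback inputs.
type InputSource = "voice" | "tap" | "gesture";
type Stage = "summoning" | "collecting" | "grounding" | "fused";

const KEYWORD = "rise";
const ANCESTOR_COUNT = 3;

class RitualFlow {
  stage: Stage = "summoning";
  summoned = 0;
  gifts = 0;
  log: string[] = [];

  // Hypothetical hook: called with a transcript from speech recognition.
  onTranscript(text: string): void {
    // Match the keyword as a whole word, ignoring case and punctuation.
    const words = text.toLowerCase().split(/[^a-z]+/);
    if (words.includes(KEYWORD)) {
      this.summon("voice");
    }
  }

  // Hypothetical hook: a tap or hand gesture performs the same action as the keyword.
  onFallback(source: "tap" | "gesture"): void {
    this.summon(source);
  }

  // Single path for every input source, so a missed voice command never blocks progress.
  private summon(source: InputSource): void {
    if (this.stage !== "summoning") return; // ignore triggers once all ancestors are present
    this.summoned += 1;
    this.log.push(`ancestor ${this.summoned} via ${source}`);
    if (this.summoned === ANCESTOR_COUNT) this.stage = "collecting";
  }

  // Each collected gift moves toward the grounding stage.
  collectGift(): void {
    if (this.stage !== "collecting") return;
    this.gifts += 1;
    if (this.gifts === ANCESTOR_COUNT) this.stage = "grounding";
  }

  // Hands placed on the Earth fuse the ancestors.
  touchEarth(): void {
    if (this.stage === "grounding") this.stage = "fused";
  }
}

// Usage: a noisy room where speech recognition only catches the keyword once.
const flow = new RitualFlow();
flow.onTranscript("Rise!");
flow.onTranscript("background chatter");
flow.onFallback("tap");
flow.onFallback("gesture");
flow.onFallback("tap"); // extra trigger, ignored because summoning is complete
for (let i = 0; i < ANCESTOR_COUNT; i++) flow.collectGift();
flow.touchEarth();
console.log(flow.stage, flow.log);
```

Because the stage checks make repeated triggers harmless, a participant who both speaks and taps does not skip ahead or summon a fourth ancestor.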

Big Thanks!

BIG THANKS to all the beautiful people who supported us: Sultan Sharrief, Kevin VanderJagt, Blair Adams, Brielle Garcia, and many more.

BIG THANKS to all the institutions that supported us: Quasar Lab at MIT, Reality Hack, Snap Spectacles, and the Narrative & Emerging Media program at ASU.